Unraveling the AI Black Box

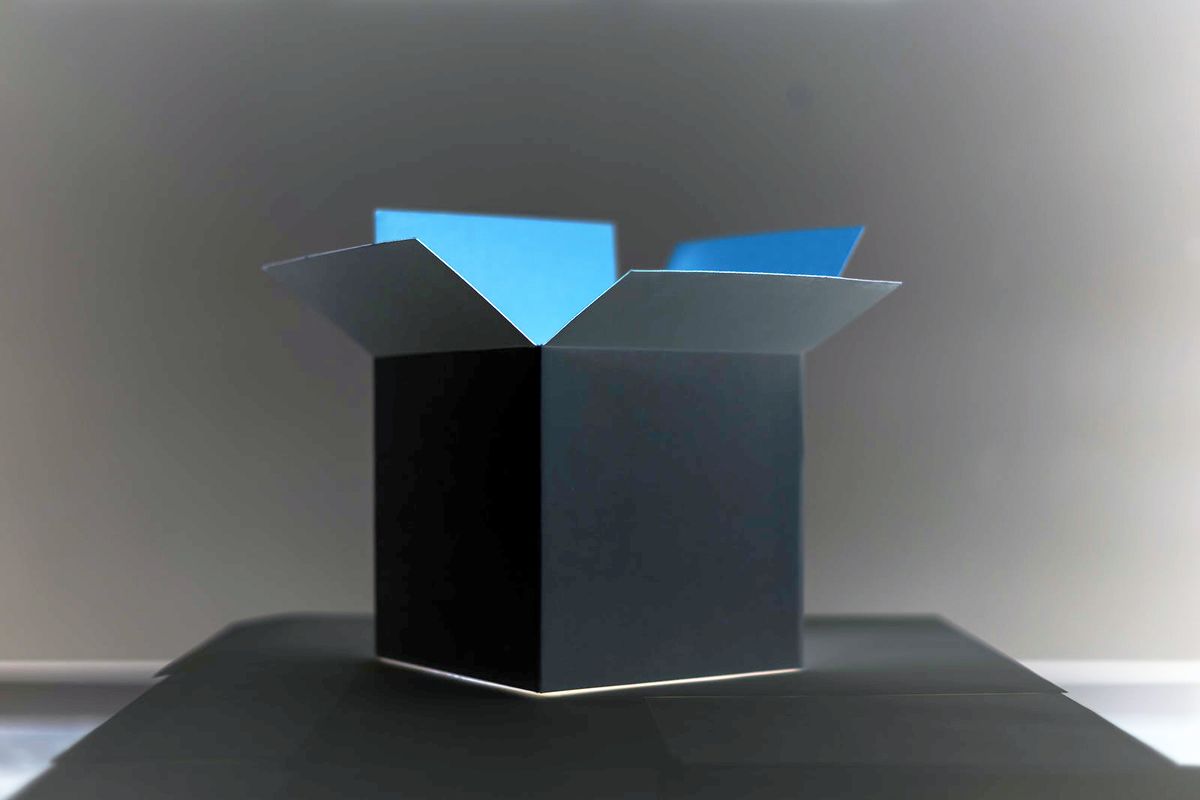

Artificial intelligence (AI) is everywhere, touching nearly every aspect of our lives. But for many, AI remains a mystery: a technology whose inner workings are hidden inside what is often called a "black box". But what does this term mean, and why is it so important to shed light on what happens inside?

The AI Black Box: A Closer Look

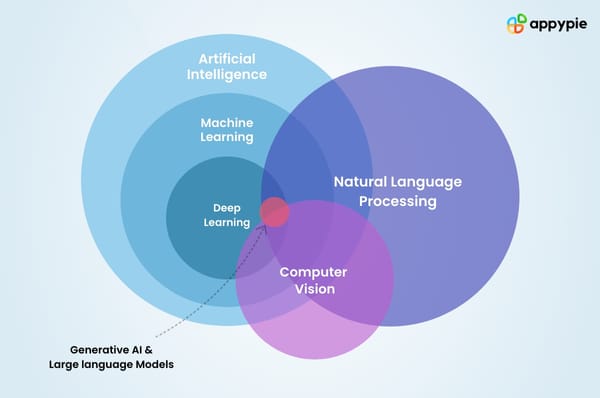

AI systems are designed to make decisions, sometimes incredibly complex ones. These decisions are based on algorithms that learn from data, and in general, the more relevant data they learn from, the better their decisions become. However, the intricate computations these algorithms perform are not always transparent, which makes AI systems hard to understand.[1]

This lack of transparency, known as the "black box" problem in AI, raises several critical concerns. Without a clear understanding of how AI systems reach their decisions, we are left in the dark, unable to ensure that anyone is held accountable or responsible for those decisions.

Why AI Transparency Matters

AI transparency refers to the visibility of the inner workings of an AI system. It means having clear insight into how an AI system makes decisions, understanding the factors that influence those decisions, and knowing the circumstances that led to a given outcome.

The AI community, including researchers, developers, and regulators, recognizes the importance of AI transparency. It is seen as an essential step towards building public trust and ensuring that AI systems are used responsibly. Transparency allows us to ensure that AI systems are not making decisions based on bias or discrimination, and that they respect user privacy and other ethical considerations.[2]

Obstacles to AI Transparency

While the need for transparency in AI is clear, achieving it is not always straightforward. There are three primary obstacles to AI transparency:

- Algorithmic complexity: Many AI systems, particularly those based on deep learning, perform enormous numbers of calculations across many layers of interconnected parameters. This complexity, and the sheer volume of calculations, make it challenging to trace back and understand the decision-making process.

- Trade secrets: Companies often protect their proprietary algorithms as trade secrets, preventing a clear understanding of how these AI systems make decisions.

- Data privacy: Many AI systems are built on personal data. Revealing how a system works without exposing the private data it learned from presents a significant challenge.[3]

The Way Forward: Enhancing AI Transparency

Given the challenges, it's clear that we need a multi-faceted approach to enhance AI transparency. This includes technical solutions, such as explainable AI, and policy measures, like open standards and regulations that require transparency.

By creating transparency in AI systems, we can understand how decisions are made and ensure that these systems align with our ethical and societal values. It's not just about making AI systems "explainable". It's about ensuring accountability and responsibility: making sure there's a clear line of sight to who (or what) is making decisions and on what basis.

While complete transparency may not always be achievable, it's crucial that we strive for as much transparency as possible. After all, as AI continues to permeate our lives, the need to ensure these systems are transparent, accountable, and responsible will only grow.[4]

This post is based on the original article "What is a 'black box'? A computer scientist explains what it means when the inner workings of AIs are hidden" by The Conversation.