Towards Ethical AI: A Guide for Businesses

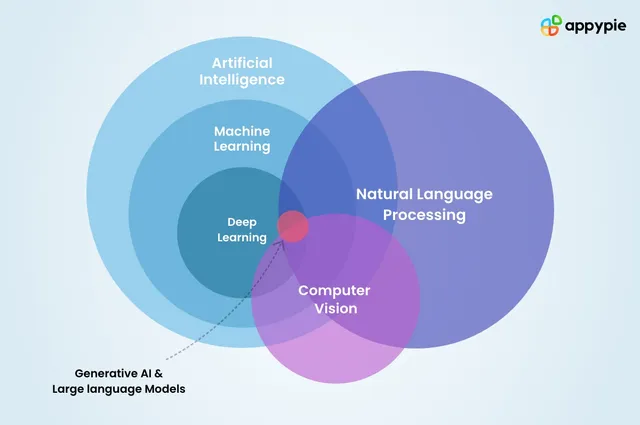

The rise of Artificial Intelligence (AI) technologies has significantly impacted various sectors, revolutionizing the way businesses operate. AI's transformative power, however, also comes with risks. The rapid deployment of powerful new generative AI technologies, such as ChatGPT, has raised concerns about potential harm and misuse. With the law struggling to keep up with these threats, businesses must take the initiative in implementing AI ethically [1].

But what does it mean to implement AI ethically? The simple answer would be to align business operations with one or more of the many AI ethics principles developed by governments, multistakeholder groups, and academics. However, the application of these principles is not as straightforward as it might seem. Practicing AI ethics requires implementing management structures and processes that help an organization identify and mitigate threats [1].

The Complexity of AI Ethics

The notion of AI ethics can be perplexing for organizations seeking clear guidance, and for consumers hoping for definitive protective standards. However, it's essential to recognize that the pursuit of ethical AI involves navigating grey areas and dealing with uncertainties [1].

In a study carried out from 2017 to 2019, researchers interviewed 23 AI ethics managers from major companies using AI. The managers' roles ranged from privacy officer to the emerging role of data ethics officer. The study revealed four key insights:

- AI poses substantial risks: Along with its many benefits, the use of AI in business can pose significant risks, including privacy issues, manipulation, bias, opacity, inequality, labor displacement, misinformation, hate speech, and intellectual property misappropriation [1].

- Ethical AI is pursued for strategic reasons: Companies pursue ethical AI to maintain trust among customers, business partners, and employees, and to prepare for emerging regulations. Ethical AI is seen as a way to build a reputation as responsible stewards of people's data [1].

- High-level AI principles are not enough: Managers need more than high-level AI principles to make specific decisions. Translating human rights principles into actionable questions for developers to produce more ethical AI systems proved to be a substantial challenge, indicating the need for practical guidelines and decision-making tools [1].

- Organizational structures and procedures are crucial: In the face of ethical uncertainties, professionals turned to organizational structures and procedures. These include hiring an AI ethics officer, establishing an internal AI ethics committee, crafting data ethics checklists, reaching out to academics for alternative perspectives, and conducting algorithmic impact assessments [1].

Responsible Decision-Making in AI Ethics

The key takeaway from the study is that companies aiming to use AI ethically should focus on responsible decision-making. They should not expect to find a simple set of principles that provide correct answers for every scenario. Instead, they should strive to make responsible decisions even in the face of limited understanding and changing circumstances [1].

Without explicit legal requirements, companies can only do their best to understand how AI impacts people and the environment, stay abreast of public concerns, and keep up to date with the latest research and expert ideas. They should also seek input from a diverse set of stakeholders and seriously engage with high-level ethical principles [1].

This approach changes the conversation around AI ethics. It encourages AI ethics professionals to focus less on identifying and applying AI principles and more on adopting decision-making structures and processes. These processes should ensure that decision-makers consider the impacts, viewpoints, and public expectations that ought to inform business decisions. In this way, businesses can take a more proactive role in addressing the ethical implications of AI, fostering trust with their stakeholders, and contributing positively to society [1].